Where, Oh Where to Test?

Kent Beck, Three Rivers Institute

Abstract:

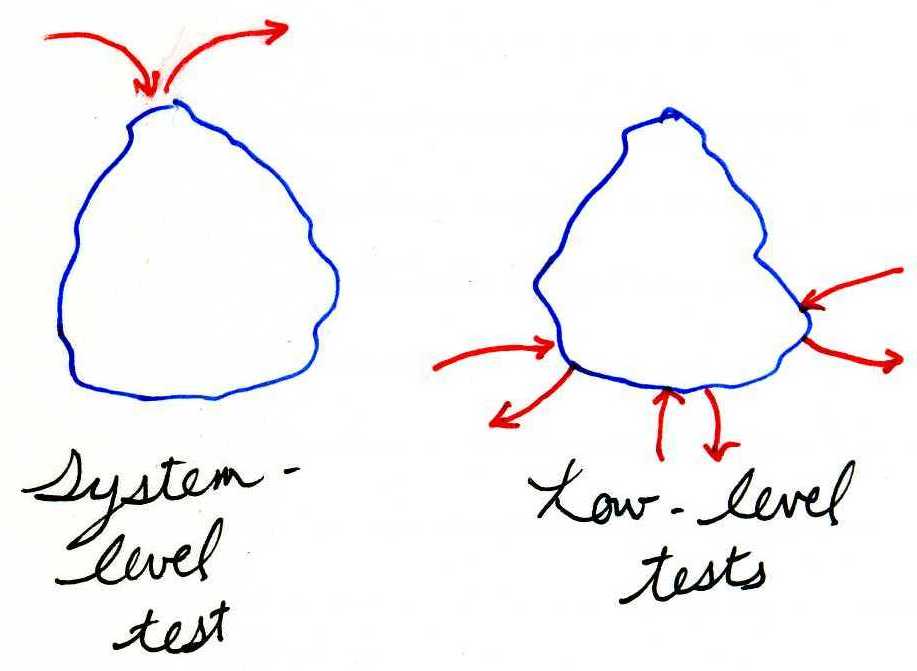

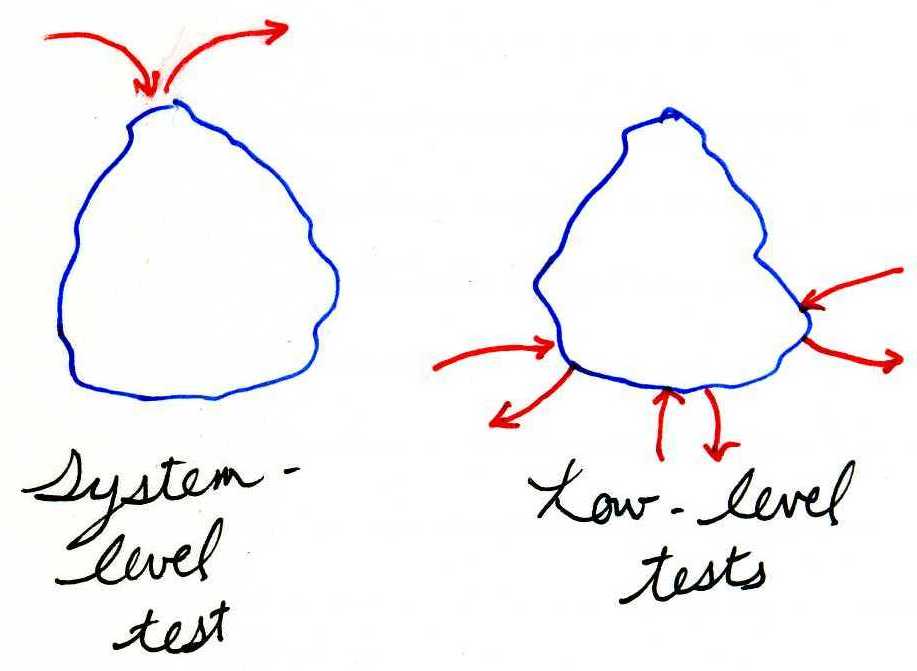

Where should I test? High-level tests give me more coverage but less

control. Low-level tests cover less of the system and can be broken by

ordinary changes, but tend to be easy to write and quick to run. The

answer to, "Where should I test?" is, as always, "It depends." Three

factors influence the most profitable site for tests: cost,

reliability, and stability.

Introduction

What makes software development fascinating after thirty-five years is

the conundrums and contradictions I still encounter every programming

day. Another one of these came up the other day. I was programming with

a client when they said they were glad to have system-level tests for

the part of the system that collects payments from customers. The

design and implementation weren't right yet, but with a comprehensive

set of functional tests they were confident that they could replace the

implementation without affecting the user-visible behavior. Now, just

the day before I had gotten stuck because I had comprehensive

functional tests, setting off my Conundrum Alarm. What was going on?

The tests for payment collection were written in

terms of business events: time passes, payments become due, payments

arrive, payments are cancelled, contracts change between the time

payments are requested and when they arrive, and so on. The business

rules related to payment processing are complicated by all the crazy

special cases. At the same time, smoothly handling payments,

automatically wherever possible, is critical to reducing customer

service costs. To write these tests, though, the system required

support for recreating

specific business situations (hand-written tests tend to be too

simple to realistically exercise the system).

The day before I had encountered a counter-example

to the value of functional tests. I was building a system to translate

quantitative data into the Google Chart API for some curious data I had

collected about how programmers use tests (I'll cover this in a later

post). With the Chart API you supply a URL with various parameters

containing data and formatting and Google gives you a bitmap in return.

I wrote my first test with my input and the URL I expected to generate.

I made it pass. I wrote a second, more complicated test. In making that

test run, I broke the first test and had to fix it. Same story with the

third test--two broken tests, extra work to get them working. Every

time I changed anything in the code, all the tests broke. Eventually I

gave up.

Overnight I had an idea: what if I had little

objects generate the parts of the URL? It should be easy to test those

little objects. Concatenating the results and inserting ampersands I

was confident I could do correctly. Once I got rolling on this new

implementation, the individual objects were so simple I just verified

them manually. (If I hadn't been in such a hurry to see the graphs, I would

have written automated tests.) In the end, I got the functionality I

wanted quickly with code that only required component-level validation.
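The "little objects" idea can be sketched roughly as follows. This is an illustration in Python, not the code I actually wrote: the class names, the `to_param` method, `build_url`, and the example.test base URL are all hypothetical. The parameter names (`cht`, `chs`, `chd`) follow the Chart API's query-string conventions for chart type, size, and text-encoded data.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ChartType:
    """Hypothetical part object: renders the chart-type parameter."""
    code: str  # e.g. "lc" for a line chart

    def to_param(self) -> str:
        return f"cht={self.code}"


@dataclass
class ChartSize:
    """Hypothetical part object: renders the size parameter."""
    width: int
    height: int

    def to_param(self) -> str:
        return f"chs={self.width}x{self.height}"


@dataclass
class ChartData:
    """Hypothetical part object: renders data series in text encoding."""
    series: Sequence[Sequence[int]]

    def to_param(self) -> str:
        # Commas separate values, vertical bars separate series.
        encoded = "|".join(",".join(str(v) for v in s) for s in self.series)
        return f"chd=t:{encoded}"


def build_url(base: str, parts) -> str:
    """Concatenate the rendered parts, inserting ampersands between them."""
    return base + "?" + "&".join(p.to_param() for p in parts)


# Each part can be checked on its own, without building a whole URL.
assert ChartType("lc").to_param() == "cht=lc"
assert ChartSize(250, 100).to_param() == "chs=250x100"
assert ChartData([[10, 20, 30], [5, 15, 25]]).to_param() == "chd=t:10,20,30|5,15,25"
```

A change to how data is encoded touches only `ChartData` and its checks; the checks for the other parts keep passing.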

To test the system or to test the objects, that was

the question on which I now had contradictory evidence. The payment

collection system profited from system-level tests and would have found

object-level tests a burden. The data charting system was just the

opposite, nearly killed by the burden of system-level tests. How could

I productively think of this situation?

Cost, Stability, Reliability

On reflection, I realized that level of abstraction was only a

coincidental factor in deciding where to test. I identified three

factors which influence where to write tests, factors which sometimes

line up with level of abstraction, but sometimes not. These factors are

cost, stability, and reliability.

Cost is an important determiner of where to test,

because tests are not essential to delivering value. The customer wants

the system to run, now and after changes, and if I could achieve that

without writing a single test they wouldn't mind a bit. The testing

strategy that delivers the needed confidence at the least cost should

win.

Cost is measured both by the developer time required

to write the tests and the time to execute them (which also boils down

to developer time). Effective software design can reduce the cost of

writing the tests by minimizing the surface area of the system.

However, setting up a test of a whole system is likely to take longer

than setting up a test of a single object, both in terms of developer

time and CPU time.

In the payment collection case, writing the

system-level tests was expensive, but made cheaper by the

infrastructure in place for recording and replaying such tests.

Executing such tests takes seconds instead of milliseconds, though.

However, since no smaller scale test provides confidence that the

system as a whole runs as expected, these are costs that must be paid

to achieve confidence. In the charting example, setting up tests was

simple at either level, and running them was lightning fast in either

case.

Stability is an aspect of cost: given a test that

passes, how often does it provide incorrect feedback? How often does it

fail when the system is working, or pass when the system has begun to

fail? Every such case is an additional cost that should be charged to

the test, and minimizing these costs is a reasonable goal of testing.

The payment collection system didn't have a problem with stability.

Business rules changed occasionally, but when they changed the tests

needed to change at the same time. The data charting system had a

serious stability problem, though. Tests were constantly breaking even

though the part of the system they validated was still working.

I could have spent more effort on my charting tests,

carefully validating only parts of the URL. This is a strategy often

employed when the expected output of a system is HTML or XML: check to

make sure a particular element is present without checking the entire

output. Partial validation increases the cost of testing, because you

have to implement the mechanism to separate out the parts of the output

and check them separately, and it reduces coverage. That is, it is

possible for an output whose parts all check out to be incorrect as a

whole. However, this risk may be worth it compared to having

system-level tests continually fail because of irrelevant changes to

the output.
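As a rough sketch of that trade-off, here is what partial validation of a generated URL might look like in Python. The helper `query_param` and the example URLs are hypothetical; `urlsplit` and `parse_qs` come from the standard library's `urllib.parse`.

```python
from urllib.parse import parse_qs, urlsplit


def query_param(url: str, name: str) -> str:
    """Return the single value of one query parameter from a URL."""
    values = parse_qs(urlsplit(url).query)[name]
    assert len(values) == 1, f"expected exactly one {name!r} parameter"
    return values[0]


# Two hypothetical outputs of the same generator, before and after an
# irrelevant change that reorders the parameters.
before = "https://example.test/chart?cht=lc&chs=250x100&chd=t:10,20,30|5,15,25"
after = "https://example.test/chart?chs=250x100&chd=t:10,20,30|5,15,25&cht=lc"

# A whole-URL comparison breaks on the reordering...
assert before != after

# ...while a partial check of just the data parameter keeps passing.
assert query_param(before, "chd") == "t:10,20,30|5,15,25"
assert query_param(after, "chd") == "t:10,20,30|5,15,25"

# The reduced coverage: a URL that has lost its chart type entirely
# still satisfies the partial check.
broken = "https://example.test/chart?chs=250x100&chd=t:10,20,30|5,15,25"
assert query_param(broken, "chd") == "t:10,20,30|5,15,25"
```

The extra mechanism (parsing the URL and picking out one parameter) is the added cost; the last assertion is the lost coverage.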

Reliability is a third factor in deciding where to

test. I should test where I'm likely to make mistakes, and not things that

always work. Each test is a bet, a probabilistic investment. If it

succeeds or fails unexpectedly, it pays off. Since it's probabilistic,

I can't tell with certainty beforehand which ones I should write.

However, I can certainly track trends and decide to concentrate where I

make mistakes (for me, I tend to make sense-of-test errors, getting a condition backwards, so I'm

particularly careful testing around logic requiring conditionals).

The challenging part of the payment collection

system is the overall system behavior. Components like sending an email

or posting an amount to an account are reliable. It's putting all the

pieces together that creates the opportunity for errors. On the basis

of reliability, then, the payment system will find system-level tests

more valuable. The data charting system reverses this analysis. Getting

the commas and vertical bars in just the right places is error prone.

Given a sequence of valid parameters, though, putting them together is

trivial. Tests of the components are just as likely to pay off as tests

of the whole URL, so the advantage goes to the cheaper tests.

Conclusion

I usually prefer low-level tests in my own practice, I think because of

the cost advantages. However, this preference is not absolute. I often

start development with a system-level test, supported by a number of

low-level tests as I add functionality. When I've covered all the

variations I can think of by low-level tests, I seed further

development with another system-level test. The result is a kind of

"up-down-down-down-up-down-down" rhythm. The system-level tests ensure

that the system is wired together correctly, while the low-level tests

provide comprehensive confidence that the various scenarios are covered.
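The rhythm might look something like this as a test suite. It is a minimal sketch using Python's `unittest`, with hypothetical functions (`encode_series`, `encode_data`, `chart_query`) standing in for a real system: one system-level test to check the wiring, followed by low-level tests for the variations.

```python
import unittest


def encode_series(values):
    """Low-level piece (hypothetical): comma-separate one data series."""
    return ",".join(str(v) for v in values)


def encode_data(series_list):
    """Low-level piece (hypothetical): bar-separate the encoded series."""
    return "chd=t:" + "|".join(encode_series(s) for s in series_list)


def chart_query(chart_type, series_list):
    """System-level entry point (hypothetical): wire the pieces together."""
    return "&".join([f"cht={chart_type}", encode_data(series_list)])


class SystemLevelTest(unittest.TestCase):
    # "Up": one test that the pieces are wired together correctly.
    def test_whole_query(self):
        self.assertEqual(chart_query("lc", [[1, 2], [3, 4]]),
                         "cht=lc&chd=t:1,2|3,4")


class LowLevelTests(unittest.TestCase):
    # "Down, down, down": variations covered on the small pieces.
    def test_single_value(self):
        self.assertEqual(encode_series([7]), "7")

    def test_empty_series(self):
        self.assertEqual(encode_series([]), "")

    def test_multiple_series(self):
        self.assertEqual(encode_data([[1], [2], [3]]), "chd=t:1|2|3")
```

Saved in a file, the suite can be run with `python -m unittest`. The next piece of functionality would start with another test in `SystemLevelTest`.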

Our work on JUnit tilts this balance towards

system-level tests. Writing and running system-level tests is generally

economical, both in programmer and execution time. Simulating test

conditions, normal and otherwise, is generally easy. Our tests tend to

exercise the system through the published API

(JUnitCore.run(classes...)). More than a fourth of our tests (116/404 as of

today) are written at the system level.

When I'm working on Eclipse plug-ins, the balance

tilts the other way. System-level tests tend to be cumbersome and run

slowly, so I work hard to further modularize the system so I can write

comprehensive low-level tests. My goal is to have reasonable confidence

that my plug-in works without starting Eclipse. Some system-level tests

are necessary in the whole suite, if only to validate assumptions my

code makes of Eclipse, assumptions that may change between releases.

Large-scale and small-scale tests both have their

place in the programmer's toolbox. Identifying the unique combination

of cost, stability, and reliability in a given situation can help to

place tests where they can do the most good for the least cost.